It’s no secret that a significant part of SEO is a ‘black box.’ While we can assume that some strategies work, predicting the impact of each individual action is challenging. At the same time, forecasts are increasingly needed to convince stakeholders to prioritize actions.

This is why testing is a great way to verify what truly works in your niche, creating the basis for business cases. It also helps to discard ideas that don’t work for your website, making room for something better.

Now, what is A/B testing? Generally speaking, A/B testing is a way of comparing two (or more) versions of the same page, resource, or component to determine which one performs better. This is achieved by presenting the variants to different users and comparing the behavior of the resulting groups.

However, as you can already guess, this wouldn’t work in SEO. You can’t randomly show different versions of the same page to Googlebot and hope that one of them converts better. So, SEO testing is pretty different from normal A/B testing:

- The experimental unit, on which the test is conducted, is not a user but Googlebot.

- You cannot test your solution on different audiences randomly. In SEO, you have only one variation per URL.

- Results are not immediate. Google needs time to detect changes and reindex the page.

- SEO testing typically aims to influence visits, clicks, impressions, etc., rather than conversions.

So, while there is no real A/B testing in SEO, it doesn’t mean we can’t run other types of experiments.

Types Of “A/B” Tests In SEO

Although there is a huge variety of experimentation types, the following two are probably the most useful for SEO:

Before / After Tests

The easiest way to test in SEO and see whether an optimization worked is to compare the performance of the page (or query, section, etc.) before and after the change was implemented.

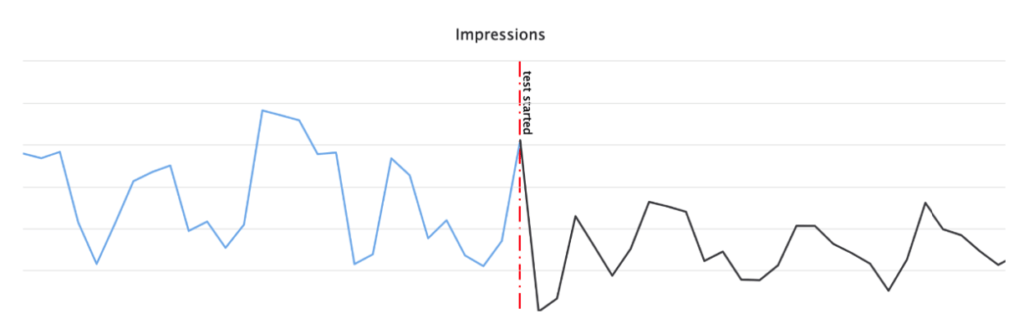

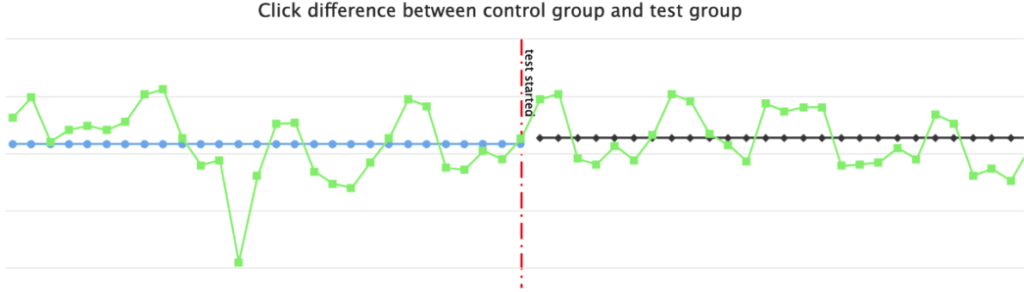

Screenshot from SEOTesting tool

Advantages:

- Easy to perform.

- Doesn’t require additional tools: you can calculate the impact manually.

- Suitable for any websites: big and small.

- Simple for testing redirection results.

Disadvantages:

- Results might vary based on seasonality or other factors.

- Results might be circumstantial and not representative.
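The manual calculation mentioned in the advantages can be as simple as comparing the daily average of a metric before and after the change. Below is a minimal sketch of that idea. The function name `before_after_change` and the click numbers are made up for illustration, and the sketch assumes two equally long windows and does nothing to correct for seasonality.

```python
from statistics import mean


def before_after_change(before, after):
    """Return the relative change in the daily average between two periods.

    `before` and `after` are lists of daily values (for example clicks)
    taken from equally long windows around the change date.
    """
    if not before or not after:
        raise ValueError("both periods need at least one day of data")
    baseline = mean(before)
    if baseline == 0:
        raise ValueError("baseline average is zero; relative change is undefined")
    return (mean(after) - baseline) / baseline


# Hypothetical daily clicks for 7 days before and 7 days after the change.
clicks_before = [100, 110, 90, 105, 95, 100, 100]
clicks_after = [120, 115, 125, 110, 130, 120, 120]

change = before_after_change(clicks_before, clicks_after)
print(f"Relative change in average daily clicks: {change:+.1%}")
```

Using full weeks for both windows keeps weekday effects from skewing the comparison, although it does not protect against the seasonality issue listed above.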

Split Testing

Split testing is the closest you can get to traditional A/B testing in the context of SEO. Here’s how it works: you pick a test group and a control group of URLs. The test group gets the changes, while the control group stays the same. Then, you compare how well they perform.

If there’s a positive change, you can apply the feature to all relevant pages. If it’s negative, you can undo the changes.

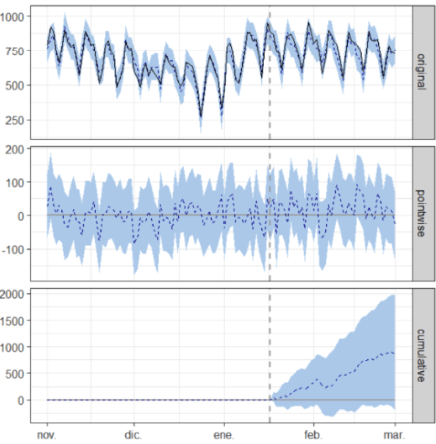

SEO split tests often use Causal Impact Methodology. Simply put, it compares what would happen without the change to what happened after the change.

Screenshot from SEOTesting tool
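To make the "what would have happened without the change" idea more concrete, here is a deliberately simplified sketch. It is not the Causal Impact model itself, which relies on Bayesian structural time series and also reports uncertainty intervals. Instead, it assumes the test group's traffic can be predicted from the control group's traffic with a straight line fitted on the pre-test period. All function names and numbers are hypothetical.

```python
def fit_line(x, y):
    """Ordinary least squares fit of y = intercept + slope * x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("control series is constant in the pre-period")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def estimate_lift(control_pre, test_pre, control_post, test_post):
    """Compare actual test-group traffic with a predicted counterfactual."""
    # Learn how the test group tracked the control group before the change.
    intercept, slope = fit_line(control_pre, test_pre)
    # Predict what the test group would have done without the change.
    expected = [intercept + slope * c for c in control_post]
    expected_total = sum(expected)
    return (sum(test_post) - expected_total) / expected_total


# Hypothetical daily clicks, summed over each group of URLs.
control_pre = [100, 120, 110, 130, 90]
test_pre = [200, 240, 220, 260, 180]
control_post = [100, 110, 120]
test_post = [220, 242, 264]

lift = estimate_lift(control_pre, test_pre, control_post, test_post)
print(f"Estimated lift versus the counterfactual: {lift:+.1%}")
```

A real analysis would need far more days of data and some measure of uncertainty before drawing conclusions from the estimated lift.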

Advantages:

- More reliable results compared to before/after tests.

- Suitable for large websites.

- Allows roll-back without significant traffic loss if the action doesn’t work as expected.

- Facilitates data-driven decision-making.

Disadvantages:

- Often requires extra work for the technical teams.

- Not all elements can be changed on only a subset of pages of the same type.

- Requires A/B testing tools.

- Difficult to perform on small websites.

Tools for SEO A/B testing:

Considering the specifics, not all A/B testing tools are suitable for SEO. Fortunately, there are some that can help you get started easily and without any coding experience:

- SEOTesting https://seotesting.com/

- SearchPilot https://www.searchpilot.com/

- SplitSignal by SEMRush https://www.semrush.com/splitsignal/

For split testing, you can also use the CausalImpact package in R if you prefer to do the calculations yourself.

Screenshot of CausalImpact in R

What Can You Test In SEO?

Honestly, almost anything. Here are just a few ideas:

- Titles and Meta descriptions

- On-page content: headers, editorial texts etc.

- Schema mark-up and/or SERP features

- Internal linking

- Backlinks

- EEAT signals

- Image ALT texts

- Migrated pages: to ensure there is no traffic drop

Alright, now that we’ve covered the basics, we are ready to start experimenting!

The SEO A/B Testing Steps

(We’ll review each one closely later)

1. Define the test

2. Launch the test

3. Evaluate results after the given timeline

4. Do roll-out / roll-back (if necessary)

5. Iterate

1. Define The Test

The preparation phase is the most important one. At this step, you have to decide:

- What would you like to test?

Got any ideas for improvements? How about changing titles / adding FAQs or maybe diversifying anchor texts?

- What result are you expecting (what’s your hypothesis)? Which metrics will you use to measure it?

Once you’ve chosen what to test, consider how to measure it. Do you anticipate more clicks, visits, or something else? Here are some options:

- Clicks

- Impressions

- Visits

- CTR

- Positions

- Decide what change you want to test.

Design the new version or feature you think might work. For instance, it could involve adding a different keyword in the title or including a new text block on the page. Get specific about what your change proposal is.

- Choose which URLs you want to test the new features on.

Consider factors like page type, topic/section, and current performance. Check how many URLs on your website could benefit from this change, ensuring you have enough pages for the experiment to produce reliable results.

The number of URLs you need for the test depends on your site’s size, traffic, and other factors. While there’s no fixed formula, you can start with 20-50 pages and increase the number if necessary. Opt for URLs with high traffic if possible. Remember, the more URLs and visits, the higher the probability that your result is reliable.

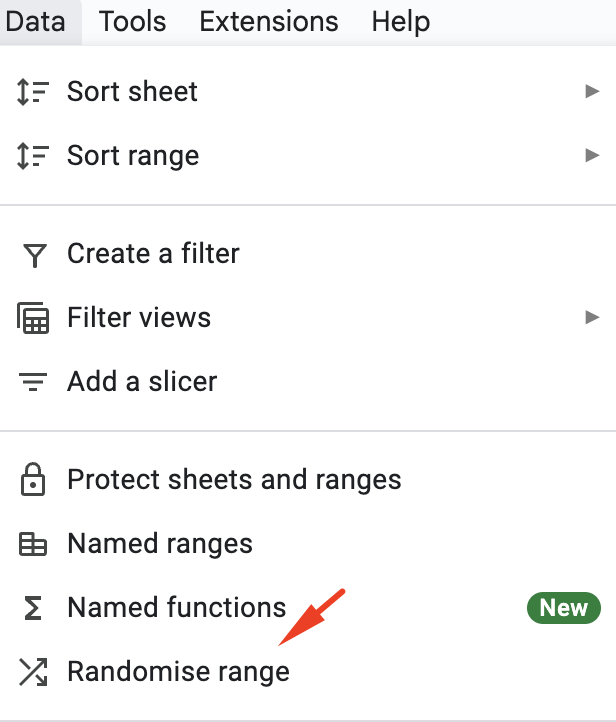

For split testing, you need two groups: variant and control. Create a list of URLs meeting your criteria. Aim for 40-100 URLs, twice as many as a before/after test. Use Google Sheets to randomize and split the list into two groups. The first is your variant group (where you implement changes), and the second is the control group (left untouched).

Screenshot from Google Sheets
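If you would rather script the randomization than do it in Google Sheets, the same split can be sketched in a few lines. The function name `split_urls`, the seed value, and the example.com URLs are placeholders for illustration.

```python
import random


def split_urls(urls, seed=42):
    """Shuffle URLs and split them into variant and control groups."""
    unique_urls = sorted(set(urls))  # drop duplicates, fix the starting order
    rng = random.Random(seed)        # a fixed seed makes the split reproducible
    rng.shuffle(unique_urls)
    half = len(unique_urls) // 2
    # First half gets the change (variant), second half stays untouched (control).
    return unique_urls[:half], unique_urls[half:]


# Hypothetical list of 40 eligible URLs of the same page type.
urls = [f"https://example.com/category/page-{i}" for i in range(1, 41)]
variant, control = split_urls(urls)
print(len(variant), "variant URLs,", len(control), "control URLs")
```

Keeping the seed alongside the URL list lets you recreate exactly the same groups later, for example when you evaluate the results.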

Important: start the test as soon as you define the URLs to prevent changes in important metrics, ensuring the test remains objective.

- What will be the timeline?

Decide how long you want your test to run; this is your timeline. Keep in mind that SEO takes time, so plan for a few weeks. While there are no specific guidelines, I typically use a timeframe of 4 to 8 weeks, depending on the feature tested. This duration allows Google enough time to notice the change and reindex the pages.

2. Launch The Test

Now, put your changes into action and wait to see the results.

3. Evaluate Results After The Given Timeline

Once the test is live, use the tools mentioned earlier to keep track of the results. Review the outcomes after the test period ends, analyze the data, and make informed decisions.

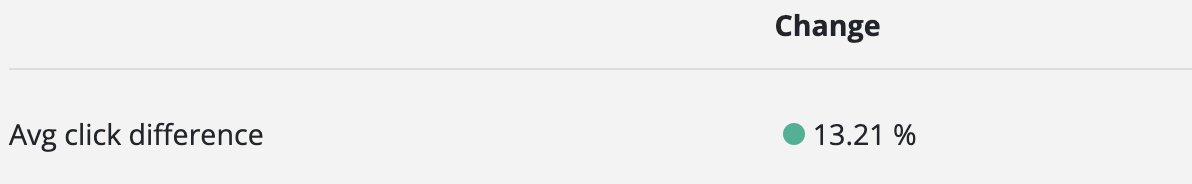

How can you tell if the test was successful or not? It’s straightforward. Look for changes in traffic or other metrics you aimed to improve on variant pages compared to the control in split tests, and over time in before/after tests. The SEO experimentation tools will make it easy for you.

Screenshot from SEO testing tool

If the results lean neither positive nor negative, consider rolling back the feature (unless avoiding negative impacts was your goal). It’s likely that the tested element doesn’t significantly impact SEO, making it not worth implementing.
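If you evaluate a split test by hand, one possible way to separate a real effect from noise is a permutation test on the per-URL changes. This is only a sketch under assumptions: it is not necessarily what the SEO testing tools do, the 0.05 threshold is a common convention rather than a rule from this article, and the per-URL changes below are invented.

```python
import random


def permutation_test(variant_changes, control_changes, n_permutations=10_000, seed=0):
    """Two-sided permutation test on the difference in mean per-URL change."""
    def avg(values):
        return sum(values) / len(values)

    observed = avg(variant_changes) - avg(control_changes)
    pooled = list(variant_changes) + list(control_changes)
    n_variant = len(variant_changes)
    rng = random.Random(seed)
    extreme = 0
    for _ in range(n_permutations):
        # Reassign URLs to groups at random and recompute the difference.
        rng.shuffle(pooled)
        diff = avg(pooled[:n_variant]) - avg(pooled[n_variant:])
        if abs(diff) >= abs(observed):
            extreme += 1
    # Add-one correction so the estimated p-value is never exactly zero.
    return observed, (extreme + 1) / (n_permutations + 1)


def verdict(observed, p_value, alpha=0.05):
    """Label the outcome; alpha is an assumed significance threshold."""
    if p_value >= alpha:
        return "inconclusive"
    return "positive" if observed > 0 else "negative"


# Hypothetical relative change in clicks per URL (test period vs. pre-period).
variant_changes = [0.12, 0.08, 0.15, 0.10, 0.09, 0.14, 0.11, 0.13]
control_changes = [0.01, -0.02, 0.03, 0.00, -0.01, 0.02, 0.01, -0.03]

observed, p_value = permutation_test(variant_changes, control_changes)
print(verdict(observed, p_value))
```

An "inconclusive" verdict corresponds to the case described above, where the results lean neither positive nor negative.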

4. Roll-Out / Roll-Back (If Necessary)

Based on whether you see positive or negative impacts, you need to decide whether to proceed with the roll-out of the test on the remaining eligible pages or roll-back. In the case of “before/after” experiments where the solution is already applied to all pages, a roll-out might not be necessary (though a roll-back is still an option if things went wrong).

5. Iterate

Regularly review the implemented changes and brainstorm ways to improve and expand the solution.

Common Mistakes to Avoid in SEO A/B Testing

- Finishing the test too quickly.

Make sure you give Googlebot enough time to notice the changes and reindex the pages.

- Choosing the wrong moment to test.

Keep in mind external factors like holidays, which can affect results.

- Testing various hypotheses at the same time.

In order to understand what worked, you need to test one thing at a time. This way, you can avoid situations where one change has a positive impact, while another has a negative one.

- Not having a sufficient sample of pages.

To ensure reliable results, it’s essential to have a sufficient number of pages in your test. Testing with only 6 pages, each receiving 1-5 impressions and 0 clicks, won’t provide enough data to draw meaningful conclusions.

- Picking URLs that are not similar.

Selecting URLs that are too different can lead to unreliable results. Since we can’t randomly show different versions to users, any difference in performance between the test and control groups will be attributed to the implemented change, so the two groups need to be comparable from the start. Choosing URLs with too many varying factors makes it impossible to separate the effect of the change from those factors and leads to incorrect conclusions.

- Assuming that the same solution will have the same impact across all types of pages.

Always keep in mind that the tested solution might not work for another website or even within different sections of the same website. Ensure you conduct a new test before making any decision.
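Some of the mistakes above, in particular too few pages or pages with almost no traffic, can be caught with a quick check before launching. The sketch below assumes you have per-URL totals exported from your analytics tool. The function names and the impression and click thresholds are hypothetical, while the minimum of 20 pages follows the starting point suggested earlier.

```python
def eligible_urls(stats, min_impressions=100, min_clicks=10):
    """Keep only URLs with enough traffic to contribute useful data.

    `stats` maps each URL to a dict with "impressions" and "clicks" totals.
    The default thresholds are illustrative assumptions, not fixed rules.
    """
    return [
        url
        for url, totals in stats.items()
        if totals["impressions"] >= min_impressions and totals["clicks"] >= min_clicks
    ]


def has_enough_pages(urls, min_pages=20):
    """Check the sample size against the suggested starting point of 20 pages."""
    return len(urls) >= min_pages


# Hypothetical per-URL totals for the pre-test period.
stats = {
    "https://example.com/a": {"impressions": 5000, "clicks": 240},
    "https://example.com/b": {"impressions": 3, "clicks": 0},
    "https://example.com/c": {"impressions": 900, "clicks": 35},
}

candidates = eligible_urls(stats)
print(candidates)
print("Enough pages for a test:", has_enough_pages(candidates))
```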

If you’re new to SEO testing, it might seem a bit confusing, so why bother with experimentation? Well, it’s one of the few ways in SEO to gather data-driven insights and figure out what truly works in your sector at the moment. Often, we rely on past successes, while Google’s algorithm evolves, and what worked yesterday might not work today. SEO testing is a step toward embracing a data-driven culture.

Daria Miroshnichenko

Note: this article contains only my personal research and opinion:)

Sources and recommended literature:

R. Kohavi, R. Henne, D. Sommerfield - Practical Guide to Controlled Experiments on the Web

R. Kohavi - Trustworthy Online Controlled Experiments: A Practical Guide to A/B Testing